Abstract

Context

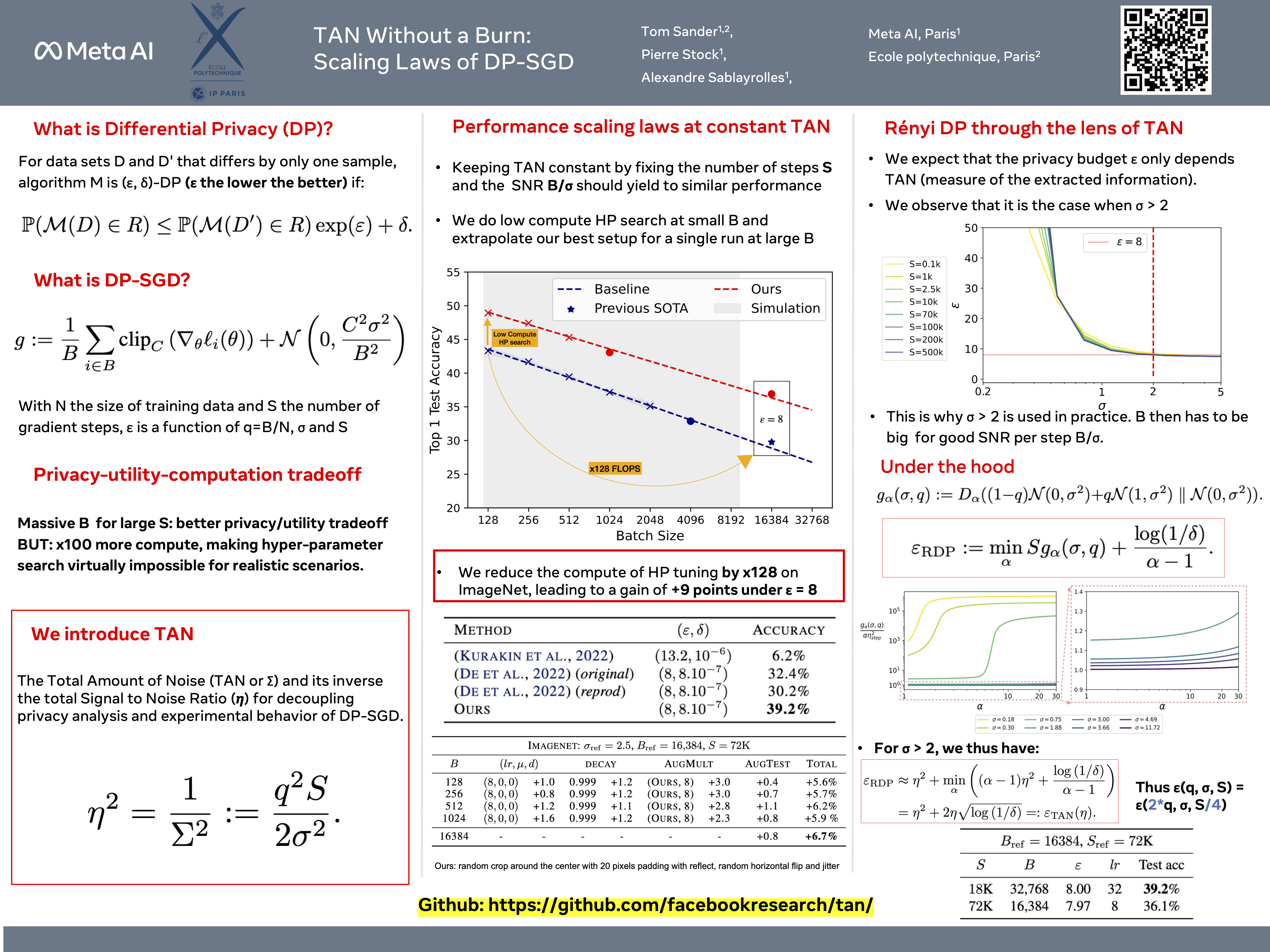

- Differential Privacy has become the gold standard to ensure that a machine learning model does not memorize its training data.

- Differentially Private methods for training Deep Neural Networks (DNNs) have progressed recently, in particular with the use of massive batches and aggregated data augmentations for a large number of training steps. These techniques require much more computing resources than their non-private counterparts, shifting the traditional privacy-accuracy trade-off to a privacy-accuracy-compute trade-off.

- We decouple privacy analysis and experimental behavior of noisy training to explore the trade-off with minimal computational requirements.

How?

- We first use the tools of Rényi Differential Privacy (RDP) to highlight that the privacy budget, when not overcharged, only depends on the total amount of noise (TAN) injected throughout training. We then derive scaling laws for training models with DP-SGD to optimize hyper-parameters with more than a \(\times 100\) reduction in computational budget.

- We apply the proposed method on CIFAR-10 and ImageNet and, in particular, strongly improve the state-of-the-art on ImageNet with a \(+9\) points gain in top-1 accuracy for a privacy budget \(\varepsilon = 8\).

When?

- The method could apply whenever a pratictioner wants to train a machine learning model with Differential Privacy guarantees. They would just need to simulate training using the same TAN but with much smaller batch sizes and perform hyper-parameter tuning.

- At the end, these hyper-parameters can be used but this time with the large batch that ensures good privacy guarantees.